New Article: Five Steps to Taking the Guesswork Out of Project Budgeting

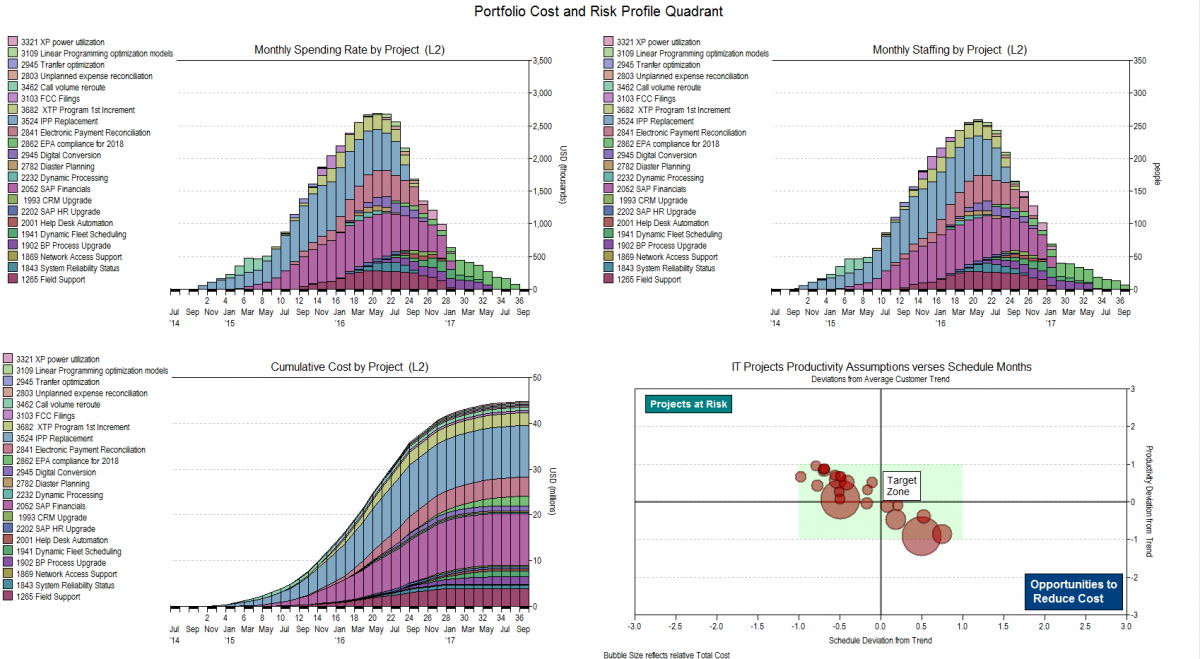

IT project budgeting is a necessary evil in every organization, but it’s becoming increasingly apparent that traditional approaches aren’t especially effective. This challenging task can be improved by breaking with tradition and thinking about budgeting differently. Today, most organizations approach budgeting in an overly simplistic manner: a manager makes a call for project estimates and receives responses based on expert opinions, task-based spreadsheets, anticipated budget restrictions, available resources, and, let’s face it, wild guesses. Most often, the estimates are nothing more than a collection of hours, with no differentiation by job role or schedule. This inefficient process can result in 40 percent of projects missing their marks, simply because they weren’t budgeted accurately. Let’s take the guesswork out of the equation. In this article for Bright Hub Project Management, QSM’s Doug Putnam provides a few simple steps to ensure that your projects remain on point and on budget.

Recently a friend of mine sent me a link to a

There are so many questions around agile planning, one of the biggest being: do we need an

Recently the correlation between seeking the best gasoline prices and
