Webinar Replay: How to Estimate Reliability for On-Time Software Development

If you were unable to attend our recent webinar, "How to Estimate Reliability for On-Time Software Development," a replay is now available.

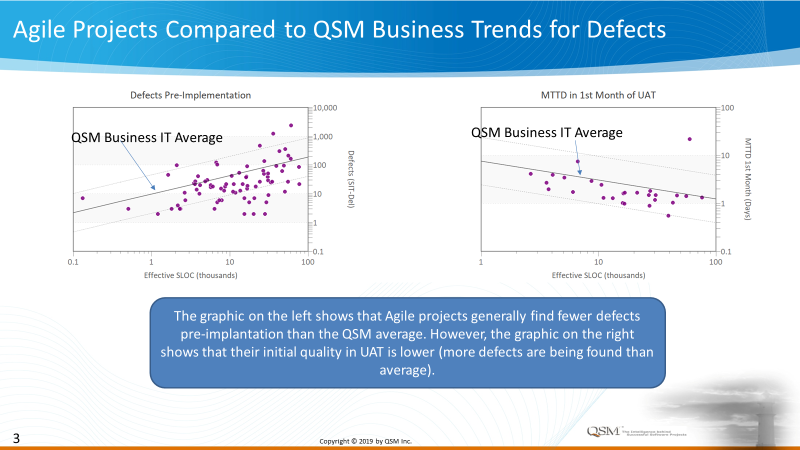

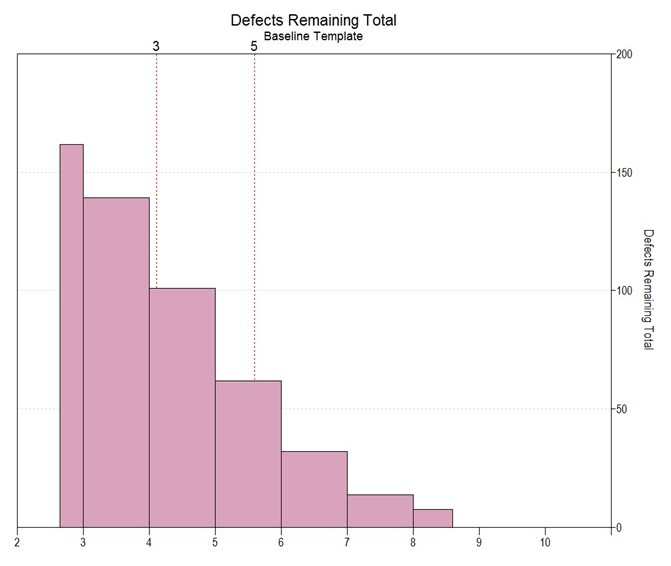

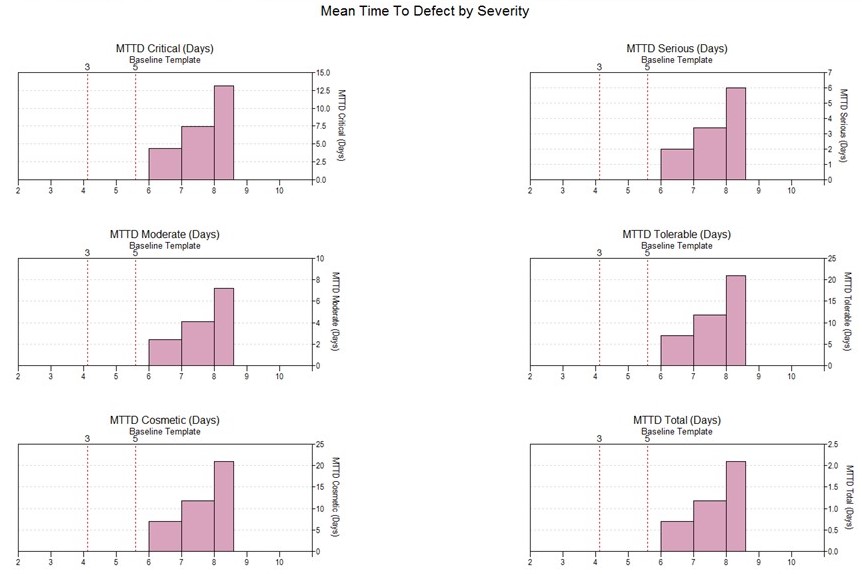

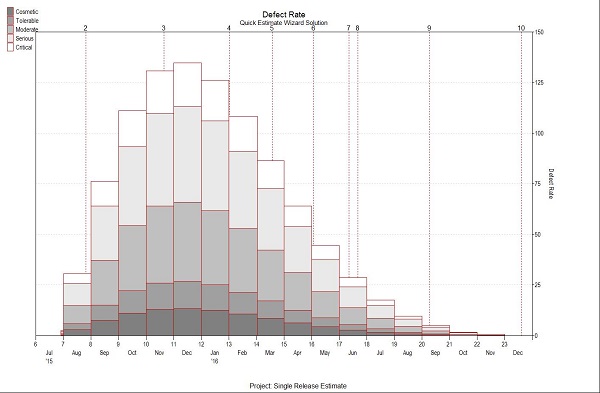

Software development is a major investment area for thousands of organizations worldwide. Negotiation and early planning meetings often revolve around major cost and schedule decisions, but one of the most important factors, reliability, is often left out of these early discussions. This is unfortunate, since early reliability estimates can help ensure that a quality product is delivered and can predict whether the project will finish on time and within budget. In this webinar, Keith Ciocco shows how to use the QSM model-based tools to estimate and track key reliability numbers alongside cost, scope, and schedule.

This presentation also includes a lively Q&A session with the audience.
