QSM Database Now Includes More Than 13,000 Completed Projects

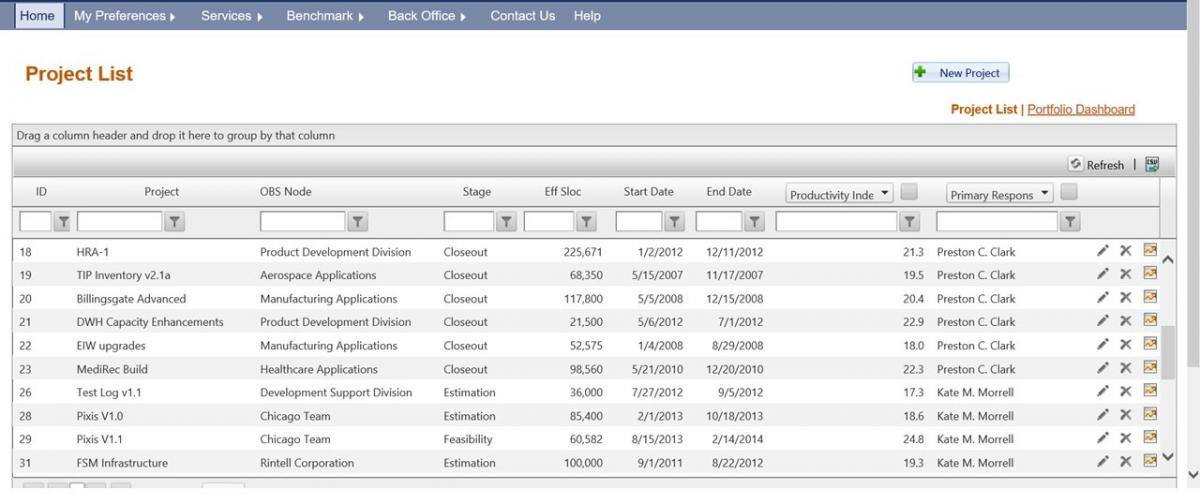

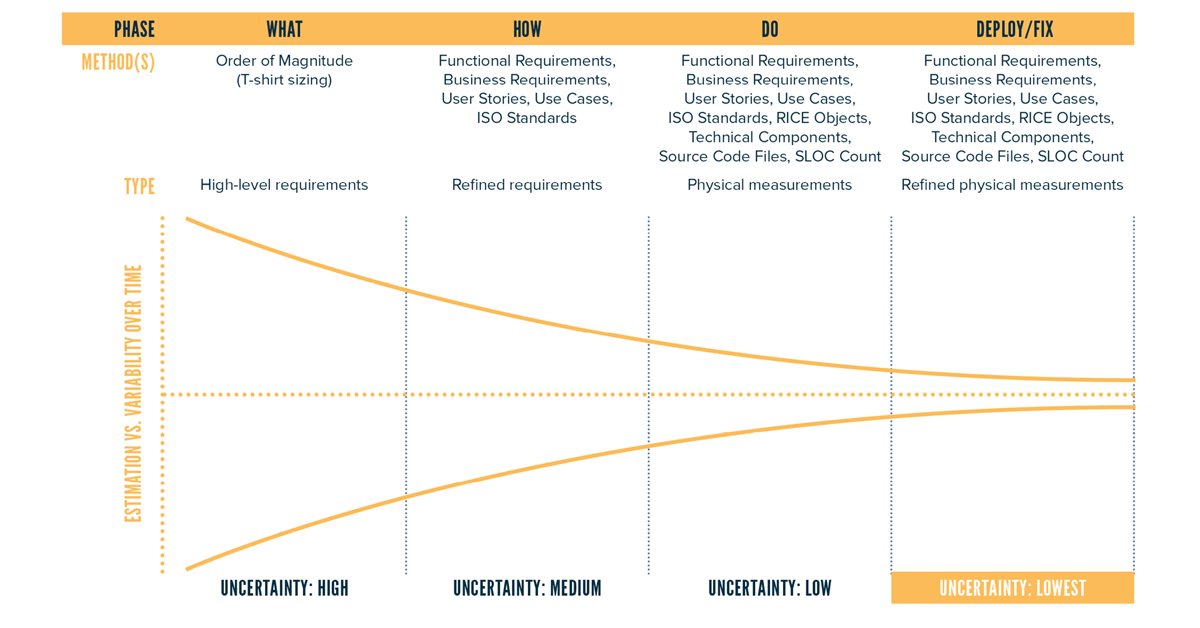

QSM is pleased to announce a major update to the QSM Database, the largest continuously updated software project performance metrics database in the world. With this update, we have validated and added more than 2,500 projects to the database in 9 major application domains (Avionics, IT, Command & Control, Microcode, Process Control, Real Time, Scientific, System Software, and Telecom) and 45 sub-domains, resulting in a current total of more than 13,000 completed projects.

The update also increased the number of agile projects in the database by 340%, resulting in some changes to the agile trend line. Specifically: