How to Measure Software Project Scope

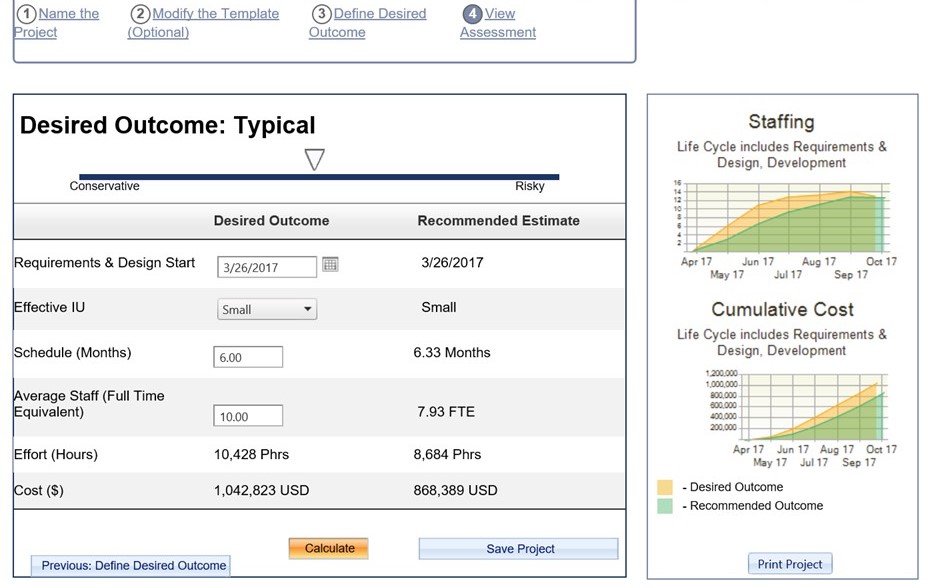

You intuitively know that the scope of your software development project determines your team size, schedule, and budget. How do you measure software project scope, especially when you don’t have much information to go on?

Episode 3: Sizing Terms & Metrics is part of our Software Size Matters video series, which explores how you not only can, but should, use software size measures at every stage of software project, program, and portfolio management. In Episode 1, we introduced QSM's Software Lifecycle Management top-down, scope-based simulation model for project estimating. In Episode 2, we explained why quantifying software size is so important. In this episode, we'll discuss sizing terms and metrics you're likely familiar with and already using.

Watch Episode 3: Sizing Terms & Metrics

Size is one of the Five Core Metrics used to understand and manage software projects.