"The game ain't over 'til it's over." - Yogi Berra

Baseball season is here and with apologies to the late Mr. Yogi Berra, “it’s like déjà vu all over again.”

Why would a project team or program management office (PMO) take the time and spend the resources to collect information about a project that was just completed? Isn’t this the time when victory is declared and everyone runs for the hills? In many cases, delving into what happened and what actual costs and durations were incurred can seem like an exercise in self-flagellation.

Historical data is arguably the most valuable input available in the software estimation process. While other inputs such as size and available duration or staffing can be seen as constraints, properly collected historical data moves the activity from the realm of estimating closer to “factimating.”

In some cases, project managers (PMs) use “engineering expertise” to develop an estimate. While this is a valid estimating technique if properly performed, historical performance should also be a factor in the process. The fastest player at the 2015 NFL Scouting Combine was J.J. Nelson, a wide receiver from the University of Alabama at Birmingham. His time in the 40-yard dash was 4.28 seconds. This is a real piece of historical data that can be used as a comparison or performance measure. However, if one asked Mr. Nelson whether he could attain a time of 4.00, his answer might be tempered by the fact that 4.28 was the absolute best that he could do. So why would a project manager or estimator not take previous performance into consideration when developing estimates? The vast majority of software developers may be justifiably optimistic in their assumptions about team capabilities, requirements stability, and budget availability; however, most experienced PMs will admit that not much goes as planned.

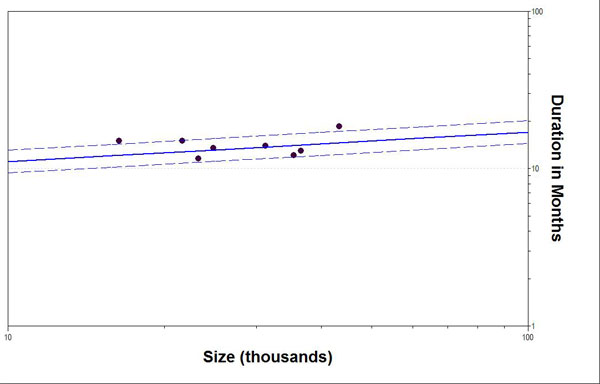

As an example, the accompanying graphic depicts a log-log plot of historical projects for one organization, using size and duration as metrics. Mean values are shown as the solid blue line, while the dashed lines represent ± 1 standard deviation. Data from completed projects are shown as black dots. The plot demonstrates that as size increases, duration increases, which should be no surprise to most PMs.

If a duration estimate for a project of approximately 120,000 size units is 2 months, as depicted by the red “x,” it should be fairly obvious that this accelerated schedule is far shorter than anything the organization’s historical performance supports. Of course, this historical view compares only duration to size, and one might argue that the PM will just throw the “entire student body” at the project in order to shorten the duration. In this case, an analysis of historical staffing profiles by project size would be in order to determine whether this approach has succeeded in the past. Concurrently, an analysis of historical quality performance could be completed to demonstrate that higher staffing profiles almost always produce markedly more defects.
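The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the method used by any particular tool: the `history` values are invented purely for the example, the function names are hypothetical, and the trend is an ordinary least-squares line fitted in log-log space with the residual standard deviation standing in for the dashed ± 1 standard deviation bands.

```python
import math
from statistics import mean

# Hypothetical (size, duration-in-months) pairs, invented for illustration only.
history = [
    (5_000, 4.0),
    (12_000, 5.5),
    (30_000, 8.0),
    (60_000, 10.5),
    (150_000, 15.0),
    (400_000, 22.0),
]


def fit_loglog(points):
    """Least-squares fit of log10(duration) = a + b * log10(size).

    Returns (a, b, sd), where sd is the residual standard deviation
    in log10 units (n - 2 degrees of freedom).
    """
    xs = [math.log10(size) for size, _ in points]
    ys = [math.log10(duration) for _, duration in points]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_bar - b * x_bar
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sd = math.sqrt(sum(r * r for r in residuals) / (len(points) - 2))
    return a, b, sd


def sigma_distance(size, duration, a, b, sd):
    """How many standard deviations an estimate sits from the trend line."""
    predicted = a + b * math.log10(size)
    return (math.log10(duration) - predicted) / sd


a, b, sd = fit_loglog(history)
typical = 10 ** (a + b * math.log10(120_000))
z = sigma_distance(120_000, 2.0, a, b, sd)

print(f"Trend duration at 120,000 size units: {typical:.1f} months")
print(f"A 2-month estimate sits {z:.1f} standard deviations from the trend")
```

A strongly negative distance signals an estimate far more aggressive than anything in the organization's history, which is the same message the red “x” conveys on the plot.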

Additionally, if one is using the SLIM-Suite of tools, statistically validated trend lines for a large number of metrics are available, as is the ability to create custom trend lines such as those shown in the figure above. Trend lines are developed from historical data and present statistically bounded views of an organization’s or an industry’s actual performance. As depicted above, the relative position of an estimate against actual historical data can paint an interesting picture that affords analysts and decision-makers a straightforward view of the validity of an estimate.

However, one should be cautious about historical data that looks “too good.” In the graphic above, the variation in performance, illustrated by the standard deviation lines, is very slight across a wide range of size metrics. Taken at face value this presentation would seem to indicate that the organization has tight schedule control across a range of project sizes. This may actually be a true statement, but this is not the hallmark of the average organization, and one might consider digging a little more deeply into the source of the data and its incorporation into any analysis tool.

Hence, history can be a two-edged sword. George Santayana noted: “Those who cannot remember the past are condemned to repeat it.” This maxim certainly applies in the software world.

Gathering historical data can be highly comprehensive or limited to certain core metrics. The data can be collected for the project as a whole or, preferably, by “phase,” where the phases depend on the software development lifecycle in use. Agile projects can and should also collect the data, if not by sprint, then certainly by release.

When beginning the data collection process, it is important to identify potential sources of data. Usually this information can be found in the following artifacts:

- Software lifecycle methodology

- Project schedule chart used in briefings

- Microsoft Project® file, Clarity® / PPM® export, or similar detailed planning tool

- Requirements documents

- Staffing profiles

- Defect reports

At a minimum it is advisable to collect:

- Size - in terms of what work has to be accomplished. This could be Source Lines of Code, User Stories, Epics, Function Points, etc.

- Start Date, End Date (Duration)

- Effort

- Peak Staff

- Defects
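One way to hold these minimum metrics is a small record type. The sketch below is only an illustration: the class name, field names, and units (person-months for effort, an average month length of 30.44 days for duration) are assumptions, not a prescribed schema, and the example project is hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ProjectRecord:
    """Core metrics for one completed project or phase (illustrative names)."""

    name: str
    size: float                  # amount of work, in the unit named by size_unit
    size_unit: str               # e.g. "SLOC", "function points", "user stories"
    start_date: date
    end_date: date
    effort_person_months: float
    peak_staff: float
    defects: int

    def __post_init__(self):
        # Reject records that cannot be right before they reach the repository.
        if self.end_date < self.start_date:
            raise ValueError("end_date precedes start_date")
        if self.size <= 0 or self.effort_person_months <= 0:
            raise ValueError("size and effort must be positive")

    @property
    def duration_months(self) -> float:
        # Duration is derived from the dates; 30.44 is an average month length.
        return (self.end_date - self.start_date).days / 30.44


# Hypothetical project, for illustration only.
example = ProjectRecord(
    name="hypothetical-release-1",
    size=250,
    size_unit="user stories",
    start_date=date(2015, 1, 5),
    end_date=date(2015, 7, 3),
    effort_person_months=48.0,
    peak_staff=10,
    defects=37,
)
print(f"{example.name}: {example.duration_months:.1f} months")
```

Deriving duration from the start and end dates, rather than storing it separately, keeps the two from drifting apart when a record is corrected later.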

Data collection is the foundation of project estimation, tracking, and process improvement. When historical data is collected across a PMO or enterprise it is recommended that the data be stored in a data repository that can be accessed for estimating future projects or for individual project forensic analysis.

Establishing a repository of historical data can be useful for a variety of reasons:

- Promotes good record-keeping

- Can be used to develop validated performance benchmarks

- Accounts for variations in cost and schedule performance

- Supports statistically validated trend analysis

- Helps make estimates “defensible”

- Can be used to bound productivity assumptions

One must also be careful in the collection and use of historical data. Consistency in data collection is important since currency values, size differences, “typical” hours per month values and defect severity levels should all be normalized. Some of the problems linked to the use of poor-quality data include:

- poor project cost and schedule estimation

- poor project cost and schedule tracking

- inappropriate staffing levels and flawed product architecture and design decisions

- ineffective and inefficient testing

- fielding of low-quality products

- ineffective process change
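The normalization called for before the list above (currency values, “typical” hours per month, and defect severity levels) might look like the following sketch. The 152-hour month, the severity mapping, and all function names are assumptions chosen for illustration; each organization would substitute its own conventions.

```python
# Assumed house convention for a "typical" month; not a universal value.
STANDARD_HOURS_PER_MONTH = 152.0

# Hypothetical mapping from team-specific severity labels to a common 1-4 scale.
SEVERITY_MAP = {
    "blocker": 1,
    "critical": 1,
    "major": 2,
    "minor": 3,
    "trivial": 4,
}


def effort_to_person_months(hours, hours_per_month=STANDARD_HOURS_PER_MONTH):
    """Convert reported effort hours to person-months on one common basis."""
    if hours_per_month <= 0:
        raise ValueError("hours_per_month must be positive")
    return hours / hours_per_month


def cost_to_base_currency(amount, rate_to_base):
    """Convert a cost to the repository's base currency at a supplied rate."""
    return amount * rate_to_base


def normalize_severity(label):
    """Map a team's severity label onto the common scale."""
    return SEVERITY_MAP[label.strip().lower()]


print(effort_to_person_months(1520))
print(normalize_severity(" Critical "))
```

Applying conversions like these at the point of entry means every record in the repository is comparable, whichever team or tool it came from.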

In short, an organization’s measurement and analysis infrastructure directly impacts the quality of the decisions made at all levels, from practitioners and engineers through project and senior managers. The quality (and quantity) of the historical data used in that infrastructure is crucial.

Those organizations fortunate enough to have licensed the SLIM-Suite of tools already have all the resources needed not only to store the historical data, but also to analyze it and build custom metrics from it. The metrics and data can then be used for estimating and controlling projects through seamless interfaces to the SLIM-Estimate and SLIM-Control tools.