Has Software Productivity Declined Over Time?

Peter Hill of ISBGS poses an interesting question:

Has software productivity improved over the last 15 years? What do you think? Perhaps it doesn't matter as long as quality (as in defect rate) as improved?

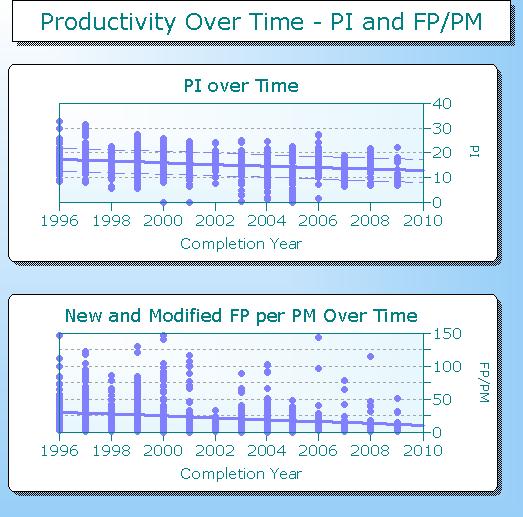

Two widely used productivity measures are Function Points/Person Month and QSM's PI (or productivity index). To answer Peter's question, I took a quick look at 1000 medium and high confidence business systems completed between 1996 and 2011. Here's what I found:

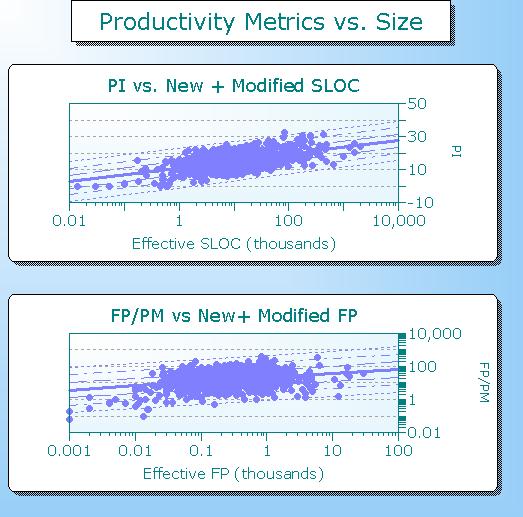

Whether we look at PI or FP/PM, the story's the same - on average, productivity has actually decreased over time. What could be causing this? One possible explanation is the correlation between measured productivity and the volume of delivered functionality. As the next chart shows, regardless of the metric used average productivity increases with project size:

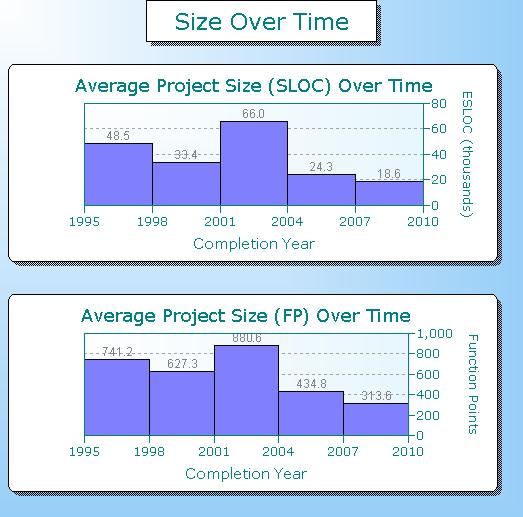

Which led me to wonder: what has happened to average project size over time? Again, regardless of whether the delivered functionality was measured in SLOC or Function Points, the story was the same: projects are getting smaller.

Peter's question is a good example of why we often need more than one metric to interpret the data. More on that topic coming up shortly!