An Empirical Examination of Brooks' Law

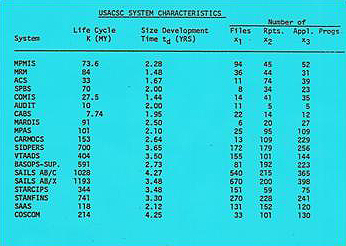

Building on some interesting research performed by QSM's Don Beckett, I take a look at how Brooks' Law stacks up against a sample of large projects from our database:

Does adding staff to a late project only make it later? It's hard to tell. Large team projects, on the whole, did not take notably longer than average. For small projects the strategy had some benefit, keeping deliveries at or below the industry average, but this advantage disappeared at the 100,000 line of code mark. At best, aggressive staffing may keep a project's schedule within the normal range of variability.

Contrary to Brooks' law, for large projects the more dramatic impacts of bulking up on staff showed up in quality and cost. Software systems developed using large teams had more defects than average, which would adversely affect customer satisfaction and, perhaps repeat business. The cost was anywhere from 3 times greater than average for a 50,000 line of code system up to almost 8 times as large for a 1 million line of code system. Overall, mega-staffing a project is a strategy with few tangible benefits that should be avoided unless you have a gun pointed at your head. One suspects some of these projects found themselves in that situation: between a rock and a hard place.

How do managers avoid these types of scenarios? Software development remains a tricky blend of people and technical skills, but having solid data at your fingertips and challenging the conventional wisdom wisely can help you avoid costly mistakes.