Several weeks ago I read an interesting study on finding bugs in giant software programs:

The efficiency of software development projects is largely determined by the way coders spot and correct errors.

But identifying bugs efficiently can be a tricky business, when the various components of a program can contain millions of lines of code. Now Michele Marchesi from the University of Cagliari and a few pals have come up with a deceptively simple way of efficiently allocating resources to error correction.

...Marchesi and pals have analysed a database of Java programs called Eclipse and found that the size of these programs follows a log normal distribution. In other words, the database, and by extension any large project, is made up of lots of small programs but only a few big ones.

So how are errors distributed among these programs? It would be easy to assume that the errors are evenly distributed per 1000 lines of code, regardless of the size of the program.

Not so, say Marchesi and co. Their study of the Eclipse database indicates that errors are much more likely in big programs. In fact, in their study, the top 20 per cent of the largest programs contained over 60 per cent of the bugs. That points to a clear strategy for identifying the most errors as quickly as possible in a software project: just focus on the biggest programs.

Nicole Tedesco adds her thoughts:

... my guess is that bugs follow a Pareto "80/20" rule: the buggiest modules, that is the modules with the highest number of bugs per line of source code (bug density) will be found in the largest of a system's modules which in turn contain about 80% of the entire system's code. Note that I am not saying that 80% of the bugs will be found in 80% of the source code. The average bug density will not be evenly distributed amongst all modules, but rather skewed towards the largest of them.

Software development is a distinctly linguistic process. An interesting statistic in linguistics is that about 20% of the characters in any human language appear in about 80% of the words of that language. This can be explained by "network", or "connection", effects between a language's characters. The general rule of thumb for networks (maps of nodes and their connections) is that 20% of the nodes consume 80% of the connections. In the real world this explains why the most popular web sites (about 20% of the web sites), Google for example, consume the majority of links from other sites (about 80%). Like any other kind of linguistic expression, large software modules are also exercises in network effects and probably obey 80/20 rule behavior in terms of bug potential, performance hits, code complexity and so on.

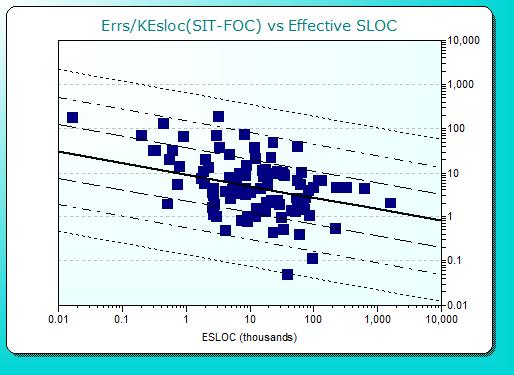

Nicole's observation may well explain the puzzling discrepancy between the MIT study's conclusion and my own observations of defect density vs. size in the QSM database. The graph above is taken from a sample of 100 recent IT projects which reported defect data in all 5 of QSM's default severity categories (that last condition was included to ensure an apples-to-apples comparison between projects in this data set).

You'll note that, unlike the MIT study, this graph shows average defect density declining as project size (defined as new + modified code) increases. The same behavior was observed when measuring Total Size (new + modified + unmodified code). If anything, the reduction in defect density as projects grew larger was even more marked.

But then I'm not sure we're measuring the same type of project included in the Eclipse database. Our projects are all, or at least predominantly, standalone projects or releases as opposed to modules that will be assembled into a larger product. That may be one explanation for the differing behavior. Another may be that the MIT graph plotted % of Defects (in the program as a whole?) against % of Programs (the percent of the entire program's size represented by individual software modules?). That's a different metric from defect density, which divides each project's defect count by its own size.
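To make the difference between the two metrics concrete, here is a minimal Python sketch. The function names and the `(lines_of_code, defects)` pairs are my own inventions for illustration only; they are not taken from the Eclipse data or the QSM database, and the reading of "% of Defects vs. % of Programs" as "share of defects in the largest fraction of modules" is an assumption.

```python
def defect_share_of_largest(modules, top_fraction=0.2):
    """Share of all defects found in the largest `top_fraction` of modules.

    `modules` is a list of (lines_of_code, defects) pairs.
    """
    ranked = sorted(modules, key=lambda m: m[0], reverse=True)
    k = max(1, round(len(ranked) * top_fraction))
    total_defects = sum(defects for _, defects in ranked)
    return sum(defects for _, defects in ranked[:k]) / total_defects


def defect_density(modules):
    """Defects per 1000 lines of code for each (lines_of_code, defects) pair."""
    return [1000 * defects / size for size, defects in modules]


# Hypothetical data, invented for illustration; listed largest module first.
modules = [
    (50_000, 100),
    (20_000, 50),
    (5_000, 15),
    (2_000, 8),
    (1_000, 5),
    (800, 4),
    (500, 3),
    (400, 3),
    (200, 2),
    (100, 1),
]

share = defect_share_of_largest(modules)
densities = defect_density(modules)

print(f"Largest 20% of modules hold {share:.0%} of defects")
print("Defects per KLOC, largest to smallest:", densities)
```

In this made-up data set the two largest modules hold most of the defects, yet defects per 1000 lines fall steadily as size grows. Both statements are true at once, so a "share of defects" graph and a defect density graph can appear to disagree without contradicting each other.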

Still another possibility is that (as Tedesco observed earlier) the real driver behind defect density is not actually code size but connectivity. In an object-oriented world, this makes a lot of sense: the more modules that make use of Module A, the higher the probability that something in Module A will conflict with one of the many other modules it must exchange information and interact with. In many ways, the object model is an enormous complexity factor in and of itself.

At any rate, it's an interesting question. Has anyone taken a look at the relationship between defect density and project size with their own data? I'd be interested to know.