Derived PI: Is PI from Peak Staff “Good Enough”?

Are you having a hard time collecting total effort for SLIM Phase 3 on a completed project?

Can you get a good handle on the peak staff?

Maybe we can still determine PI!

It is difficult and often time-consuming to collect historical metrics on completed software projects. However, some metrics are usually easier to collect than others: peak staff, the start and end dates of Phase 3, and the size of the completed project. Asking these questions can get things started:

- So, how many people did you have at the peak?

- When did you start design and when was integration testing done?

- Can we measure the size of the software?

That gives us the minimum set of metrics to dig up.

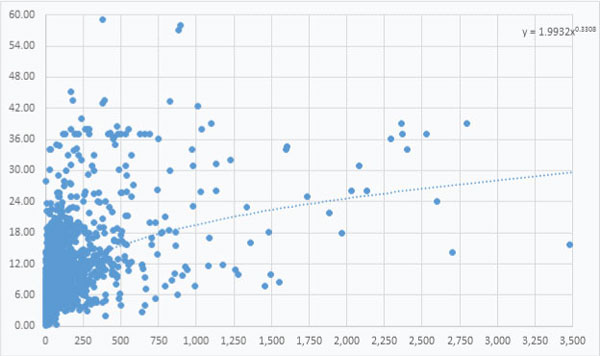

However, the PI (Productivity Index) formula also requires Phase 3 effort. Can we use SLIM to generate a useful PI from peak staff instead of total effort?

A statistical test on historical metrics can answer this question.

What are we comparing?

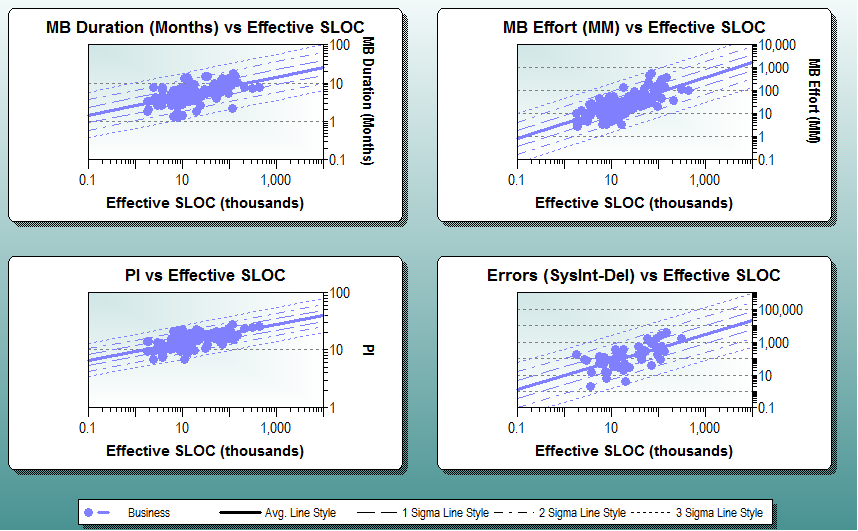

- Projects used in this study had all four of the following: actual reported effort, size, peak staff, and duration.

- For each project, a derived effort is generated from peak staff, size and duration.

- A derived PI is generated from the derived effort, size and duration. This derived PI is then compared to the actual PI.
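The comparison step above can be sketched in a few lines of Python. This is only an illustration, not the study's method: the article does not name the statistical test, so a paired t statistic is assumed here, and the function name `paired_pi_comparison` and the sample PI values are hypothetical placeholders. The derived and actual PI values themselves would come from SLIM.

```python
from math import sqrt
from statistics import mean, stdev


def paired_pi_comparison(actual_pi, derived_pi):
    """Summarise per-project differences (derived PI minus actual PI).

    Assumption: a paired t statistic is used as the comparison;
    the article does not say which test was applied.
    """
    if len(actual_pi) != len(derived_pi):
        raise ValueError("each project needs both an actual and a derived PI")
    if len(actual_pi) < 2:
        raise ValueError("need at least two projects")

    # One difference per project, since both PIs describe the same project.
    diffs = [d - a for a, d in zip(actual_pi, derived_pi)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample standard deviation

    # t = mean difference divided by its standard error.
    t_stat = mean_diff / (sd_diff / sqrt(n)) if sd_diff > 0 else float("nan")
    return {"n": n, "mean_diff": mean_diff, "sd_diff": sd_diff, "t_stat": t_stat}


# Placeholder values for illustration only; not data from the study.
actual = [14.0, 16.5, 12.0, 18.0]
derived = [14.5, 16.0, 12.5, 18.5]
summary = paired_pi_comparison(actual, derived)
print(summary)
```

A mean difference near zero with a small spread would suggest the derived PI tracks the actual PI closely. The t statistic would then be checked against a t distribution with `n - 1` degrees of freedom.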

Definitions for terms: