I am a professional software project estimator. While not blessed with genius, I have put in sufficient time that by Malcolm Gladwell’s 10,000-hour rule, I have paid my dues to be competent. In the past 19 years I have estimated over 2,000 different software projects. For many of these, the critical inputs were provided and all I had to do was the modeling. Other times, I did all of the legwork, too: estimating software size, determining critical constraints, and gathering organizational history to benchmark against. One observation I have taken from years of experience is that there is overwhelming pressure to be more precise with our estimates than the data can support.

In 2010 I attended a software conference in Brazil. As an exercise, the participants were asked to estimate the numerical ranges into which 10 items would fall. The items were such disparate things as the length of coastline in the United States, the gross domestic product of Germany, and the square kilometers in the country of Mali: not things even a trivia expert would be likely to know offhand. Of 150 participants, one person made all of the ranges wide enough. One other person (me) got 9 out of 10 correct.

What does this tell us? Firstly, even though they knew next to nothing about what they were estimating, most of the attendees produced ranges that were more precise than their knowledge of the data justified. Secondly, in this case the pressure to be more precise came from within: there was no external pressure to specify tight ranges (which there often is for software estimates).

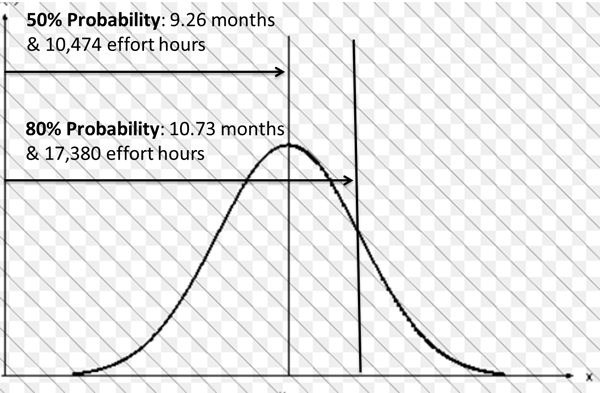

Acknowledging our desire to be more precise than we should, how can we account for uncertainty and still produce estimates that are useful for project planning? Whether you are using SLIM-Estimate or a tool based on the COCOMO II model, the default estimate produced is a “50% estimate”. It sits at the peak of the normal distribution curve, the point at which actual schedule, cost, and effort are equally likely to fall above or below it.

At 50% probability of meeting your estimated schedule and budget, you are as likely to fail as you are to succeed. To manage risk and improve the odds of success, projects must buffer their 50% work plans and commit to an outcome with a higher level of certainty. The buffer accounts for the unknowns which are part of every project (all of which translate into more time, effort, and expense than we typically plan for).

At 50% probability, half of the outcomes under the curve fall above the number at the center of the distribution and half fall below it. At 80%, four fifths of the outcomes are less than the number where the vertical line intersects the curve.

This is where the width of the normal curve is important. If you have a high level of confidence in the estimation inputs, it is reasonable to expect the range of the curve to be narrower. In this kind of low-risk scenario, the difference between a 50% and an 80% probability outcome for cost or schedule is not so dramatic. If (as is frequently the case with early estimates made before the requirements have been completed) the estimate inputs are highly uncertain, the curve’s range is wider and the difference between a 50% and an 80% probability outcome becomes more pronounced.
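To make the effect of curve width concrete, here is a minimal sketch using Python's standard library. The 10-month median and the two standard deviations are made-up illustrative values, and `buffered_estimate` is a hypothetical helper name; nothing here comes from SLIM-Estimate or COCOMO II.

```python
from statistics import NormalDist

def buffered_estimate(median, sigma, assurance):
    """Value that a normally distributed outcome stays at or below
    with probability `assurance` (0.50 returns the median itself)."""
    return NormalDist(mu=median, sigma=sigma).inv_cdf(assurance)

# Hypothetical 50% schedule estimate, in months (assumption for illustration).
median_months = 10.0

# Same 50% estimate, two different curve widths (both sigmas are assumptions).
narrow_80 = buffered_estimate(median_months, sigma=0.5, assurance=0.80)
wide_80 = buffered_estimate(median_months, sigma=2.0, assurance=0.80)

print(f"50% estimate:               {median_months:.2f} months")
print(f"80% estimate, narrow curve: {narrow_80:.2f} months")
print(f"80% estimate, wide curve:   {wide_80:.2f} months")
```

With these assumed inputs, the 80% figure is roughly 10.4 months for the narrow curve and roughly 11.7 months for the wide one, even though the 50% estimate is identical.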

What are the items we’re most likely to be uncertain about in an estimate? The list is long and ever changing. In fact, we don’t know all the things we’re uncertain about, the degree of uncertainty, nor what their interactions are. After all, it is difficult to account for things that have not yet been identified! The SLIM-Estimate model has a simple and elegant solution to this problem and summarizes uncertainty into three items:

- size (what the project creates),

- productivity (how effective the project is at doing its work), and

- labor rate

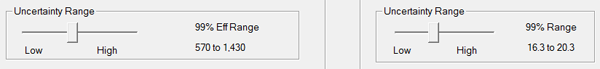

The snapshot below illustrates how uncertainty ranges are set in SLIM-Estimate around productivity and size.

When setting reasonable uncertainty ranges, software estimators must check the urge to be overly precise. Uncertainty ranges should be sufficiently wide so that 99 times out of 100 the actual project size and productivity fall within them. I find it helpful to explain uncertainty as “99 times out of 100” rather than “I have 99% confidence”. With the first, I imagine the project at its conclusion, and 99 times out of 100 both size and productivity fell within the boundaries I established. When I think about 99% confidence, I feel like a salesperson being optimistic about closing a deal (and that certainly doesn’t happen 99 times out of 100!). It’s important to imagine worst case as well as best case scenarios when establishing estimation confidence boundaries.

In the background, SLIM-Estimate uses uncertainty ranges around estimation inputs to perform Monte Carlo simulation to determine the width of the uncertainty ranges for estimation outputs (cost, effort, and project duration).
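The general idea of propagating input ranges to output ranges by simulation can be sketched in a few lines of Python. This is not how SLIM-Estimate works internally: the triangular distributions, the toy relation `effort = size / productivity`, the helper names, and every number below are assumptions chosen only to show the mechanics.

```python
import random

def simulate_effort(size_range, productivity_range, runs=10_000, seed=42):
    """Return sorted simulated effort hours.

    Each range is (low, most_likely, high). Effort = size / productivity is a
    deliberately simple stand-in, not the SLIM-Estimate equation.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    efforts = []
    for _ in range(runs):
        # random.triangular takes (low, high, mode)
        size = rng.triangular(size_range[0], size_range[2], size_range[1])
        productivity = rng.triangular(
            productivity_range[0], productivity_range[2], productivity_range[1]
        )
        efforts.append(size / productivity)
    efforts.sort()
    return efforts

def percentile(sorted_values, p):
    """Nearest-rank style percentile of an already sorted list (0 <= p < 1)."""
    index = min(len(sorted_values) - 1, int(p * len(sorted_values)))
    return sorted_values[index]

# Hypothetical inputs: size in function points, productivity in FP per hour.
low = simulate_effort((950, 1000, 1050), (0.09, 0.10, 0.11))
high = simulate_effort((700, 1000, 1300), (0.07, 0.10, 0.13))

for label, runs in (("low uncertainty", low), ("high uncertainty", high)):
    print(f"{label}: 50% = {percentile(runs, 0.50):,.0f} h, "
          f"80% = {percentile(runs, 0.80):,.0f} h")
```

Both runs share the same most likely inputs, so their 50% values land close together, while the wider input ranges push the 80% value noticeably higher.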

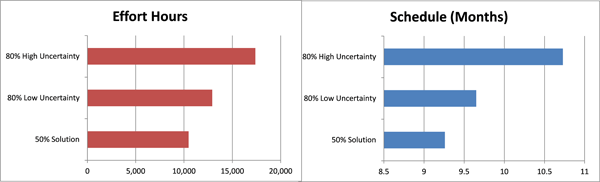

Let’s compare an estimate for a 1,000 function point project where the uncertainty ranges are low to one where they are high. There is no difference between the 50% assurance level values for low and high uncertainty estimates. However, at an 80% assurance level for schedule and effort the influence of uncertainty is readily apparent.

| | 50% Solution | Low Uncertainty (80%) | High Uncertainty (80%) |
| --- | --- | --- | --- |
| Schedule | 9.26 months | 9.65 months | 10.73 months |
| Effort | 10,474 effort hours | 12,925 effort hours | 17,380 effort hours |

In this example, high uncertainty adds about a month (1.08 months) to the risk-buffered schedule and 34% (4,455 hours) to the risk-buffered effort compared with the low uncertainty case. Software project schedules that slip by 34% are not that uncommon. So, from a project planning perspective, selecting high uncertainty levels for both size and productivity looks like the more defensible choice.
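The arithmetic behind those figures can be checked directly from the table values. The variable and key names below are only labels for this sketch.

```python
# Figures copied from the table above.
schedule_months = {"p50": 9.26, "low_uncertainty": 9.65, "high_uncertainty": 10.73}
effort_hours = {"p50": 10_474, "low_uncertainty": 12_925, "high_uncertainty": 17_380}

# Difference between the high and low uncertainty risk-buffered values.
extra_months = schedule_months["high_uncertainty"] - schedule_months["low_uncertainty"]
extra_hours = effort_hours["high_uncertainty"] - effort_hours["low_uncertainty"]
extra_effort_pct = 100 * extra_hours / effort_hours["low_uncertainty"]

# Size of each effort buffer relative to the 50% solution.
low_buffer_pct = 100 * (effort_hours["low_uncertainty"] - effort_hours["p50"]) / effort_hours["p50"]
high_buffer_pct = 100 * (effort_hours["high_uncertainty"] - effort_hours["p50"]) / effort_hours["p50"]

print(f"Extra schedule: {extra_months:.2f} months")
print(f"Extra effort:   {extra_hours:,} hours ({extra_effort_pct:.0f}%)")
print(f"Effort buffer over the 50% solution: "
      f"{low_buffer_pct:.0f}% (low) vs {high_buffer_pct:.0f}% (high)")
```

Measured against the 50% solution, the effort buffer is about 23% in the low uncertainty case and about 66% in the high uncertainty case.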

Our natural tendency is to attempt to be as precise as possible. When the data are not there to support pinpoint precision, the responsible choice is to embrace that uncertainty and produce estimates that account for the fact that software projects contain unknowns that can potentially affect cost, schedule, and effort.

While the observed tendency is to be overly precise with uncertainty ranges, there is a downside to making them too large: the ranges for cost, effort, and schedule can become too wide to be meaningful. An excellent way to determine appropriate uncertainty ranges is, of course, to use metrics from either industry data or your own completed projects! If SLIM-DataManager is your metrics repository, you can enter variances for estimated and actual schedule and effort on the Review tab. Whatever storage method you use, be sure to capture variances. They paint an accurate picture of how your organization develops software and can guide you in establishing meaningful uncertainty ranges.
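As one illustration of turning captured variances into an uncertainty range, the sketch below computes actual-to-estimate ratios and takes their 10th and 90th percentiles. The project history is invented for the example, the helper names are hypothetical, and the 10/90 cut is an arbitrary choice; a real analysis would use your own data and a range as wide as your planning needs require.

```python
import statistics

# Hypothetical completed projects: (estimated effort hours, actual effort hours).
history = [
    (1200, 1500), (800, 760), (2500, 3400), (600, 690),
    (4000, 4300), (1500, 2250), (950, 1000), (3000, 2850),
]

def overrun_ratios(projects):
    """Actual divided by estimated effort for each project, sorted ascending."""
    return sorted(actual / estimated for estimated, actual in projects)

def empirical_range(ratios):
    """10th and 90th percentile of the ratios (inclusive interpolation)."""
    cuts = statistics.quantiles(ratios, n=10, method="inclusive")
    return cuts[0], cuts[-1]

ratios = overrun_ratios(history)
low_ratio, high_ratio = empirical_range(ratios)

# Apply the historical spread to a new, hypothetical 50% estimate.
new_estimate_hours = 10_000
print(f"Historical ratio range: {low_ratio:.2f} to {high_ratio:.2f}")
print(f"Suggested effort range: {new_estimate_hours * low_ratio:,.0f} "
      f"to {new_estimate_hours * high_ratio:,.0f} hours")
```

A range derived this way reflects how far past estimates actually missed, which guards against both overly tight and uselessly wide ranges.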