The time has come once again for QSM’s annual March Madness tournament. As we enter our sixth year of friendly office competition, I looked back at some of my previous strategies to help me figure out how to complete my bracket this year. In doing so, I realized that many of these concepts can be applied to IT project management.

Three years ago, I built my bracket around an emotional desire for my preferred team to win. I paid very little attention to their performance that season, or to that of any other team. Needless to say, I did not do as well as I had hoped that year. Unfortunately, this strategy is applied fairly frequently in software estimation, with stakeholders committing their teams to unreasonable schedules and budgets for projects that are “too big to fail.” Committing to a plan based on the desired outcome does not produce a good estimate, and it often results in cost overruns and schedule delays (or, in my case, quite a bit of ridicule from the Commish).

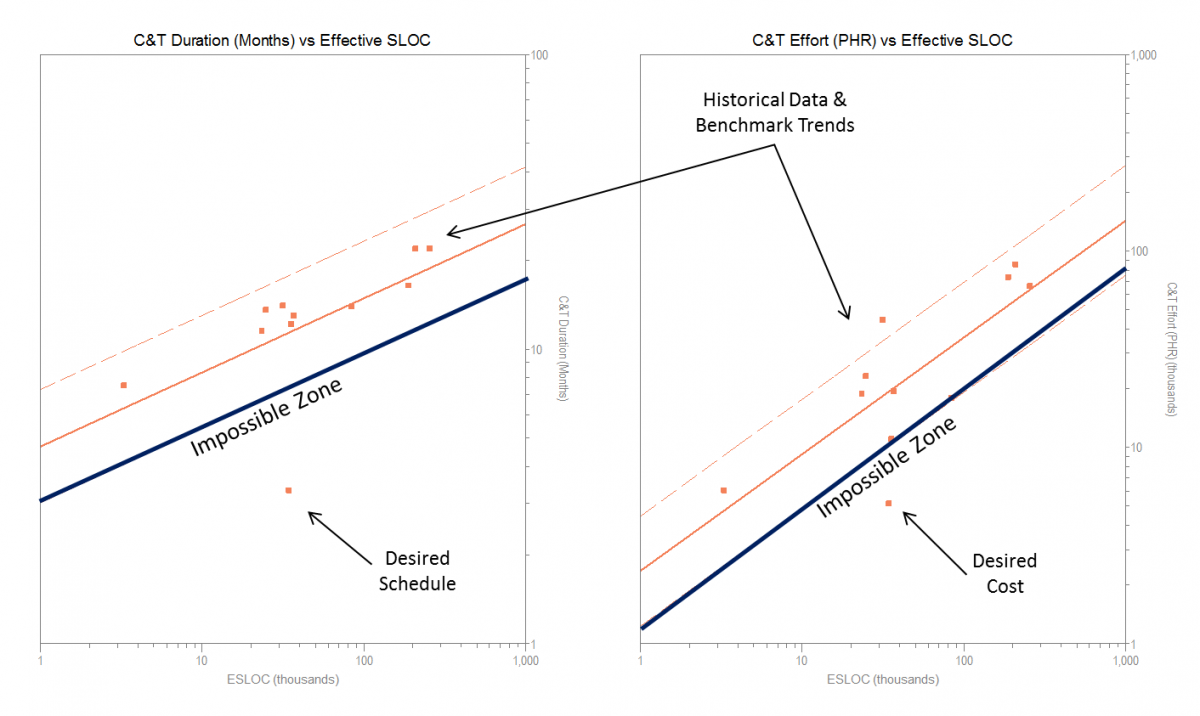

Keeping that in mind, the next year I decided to try a different strategy. I made my picks based on each team’s historical performance that season (i.e., I had Yahoo Sports auto-fill my bracket), and I did significantly better in the office pool. This method closely mimics how project managers can use historical data from past projects to estimate future software projects. Historical, trend-based estimation can help uncover when project expectations are grossly unreasonable (see below) and then suggest more favorable alternatives.

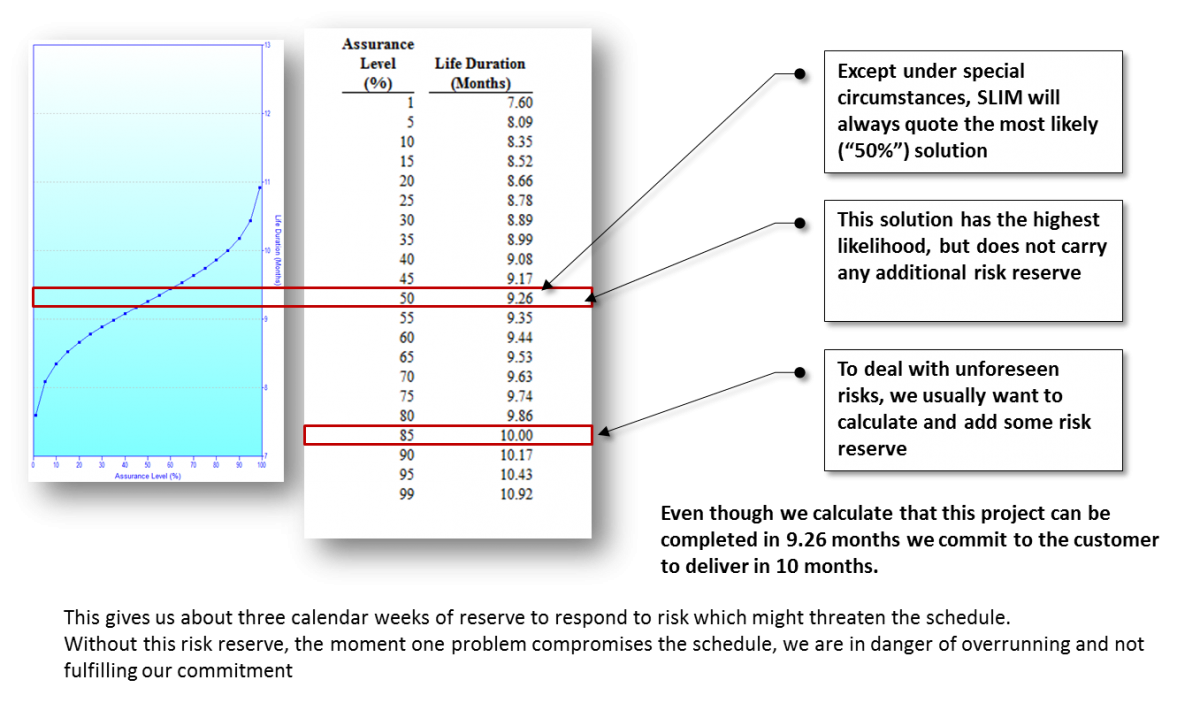
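To make the idea of trend-based estimation a little more concrete, here is a minimal sketch in Python. It is not QSM's method or any particular tool's algorithm; it simply fits a straight line through the logarithms of size and duration for a handful of completed projects, then checks how far a proposed schedule sits from that trend. The project history, the function names, and the choice of a log-log fit are all assumptions made for illustration.

```python
import math

# Hypothetical history of completed projects, made up for illustration only:
# (size in thousands of lines of code, duration in months).
HISTORY = [(10, 5.0), (20, 6.5), (40, 9.0), (80, 12.0), (160, 16.5)]


def fit_log_trend(history):
    """Least-squares fit of log(duration) = a + b * log(size).

    Returns the intercept a, the slope b, and the standard deviation
    of the residuals around the fitted line (in log units).
    """
    xs = [math.log(size) for size, _ in history]
    ys = [math.log(months) for _, months in history]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sd = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return a, b, sd


def assess_schedule(size, proposed_months, history=HISTORY):
    """Compare a proposed schedule with the historical trend.

    Returns the duration the trend suggests for this size, and the
    number of standard deviations (in log units) by which the proposal
    differs from it. Large negative values mean the proposal is far
    more aggressive than anything the history supports.
    """
    a, b, sd = fit_log_trend(history)
    expected_log = a + b * math.log(size)
    deviation = (math.log(proposed_months) - expected_log) / sd
    return math.exp(expected_log), deviation


if __name__ == "__main__":
    expected, deviation = assess_schedule(size=60, proposed_months=4.0)
    print(f"Trend suggests about {expected:.1f} months")
    print(f"Proposed schedule is {deviation:.1f} standard deviations from trend")
```

With real data the scatter around the trend would be much wider than in this tidy example, so the deviation is best read as a rough flag for "grossly unreasonable" rather than a precise score.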

Of course, any predictive activity involves taking on some risk. Cinderella stories happen all the time in college basketball, and likewise, there are a number of factors that could ultimately affect the outcome of a software project. It’s impossible to eliminate all risk in software estimation, but historical data lets you perform a risk assessment. From there, you can manage that risk more effectively by using that knowledge to make better business decisions.

If I know that the desired outcome of a project has a low probability of being achieved, I can decide whether it’s worth taking on that additional risk, and perhaps build in a buffer to improve the odds that the project is delivered on time and within budget.
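As a rough illustration of that trade-off, the sketch below treats a project's duration as normally distributed around the estimate and asks two questions: how likely is a given deadline, and how much buffer would be needed to reach a chosen level of confidence? The normal distribution, the function names, and the example figures are assumptions made for this sketch; real project outcomes are often skewed toward overruns, so treat the numbers as indicative only.

```python
from statistics import NormalDist


def probability_of_meeting(deadline_months, expected_months, sd_months):
    """Chance of finishing by the deadline, assuming normally
    distributed duration (an assumption made for illustration)."""
    return NormalDist(expected_months, sd_months).cdf(deadline_months)


def buffer_for_confidence(expected_months, sd_months, confidence):
    """Extra months to add to the expected duration so that the
    padded date is met with the requested probability."""
    padded = NormalDist(expected_months, sd_months).inv_cdf(confidence)
    return padded - expected_months


if __name__ == "__main__":
    # Hypothetical figures: a 10-month estimate with a 2-month spread.
    chance = probability_of_meeting(8, expected_months=10, sd_months=2)
    extra = buffer_for_confidence(10, 2, confidence=0.90)
    print(f"Chance of hitting an 8-month deadline: {chance:.0%}")
    print(f"Buffer for 90% confidence: {extra:.1f} months")
```

Seeing the probability and the buffer side by side is what makes the risk a knowing choice: the aggressive date may still be worth committing to, but its odds are no longer a surprise.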

Taking on additional risk has the potential for great rewards, but only if it is done knowingly. This year, I decided to do a head-to-head comparison of the two strategies I’ve used in the past. On the left side of my bracket, I let each team’s historical performance determine the winners. On the right side, I made my picks based on my emotional desire for UVA to win.

UVA has had a pretty good season so far, so maybe I can afford to take a calculated risk by having them make it to the championship game in my bracket. Besides, in this situation there are few consequences for taking on some additional risk. If UVA ends up losing, all I stand to lose is bragging rights and, perhaps, some of my dignity as my esteemed colleagues pile on the ridicule. If I were playing for $1 million that could be applied to my program budget, I’d probably make my decisions a little differently.

Only time will tell the outcome of a software project, or the winner of the QSM March Madness pool. Over the next few weeks, as the competition plays out, we’ll see whether taking a calculated risk worked in my favor or whether I should alter my strategy for 2017.