New Video: How to Use Project History for Early Software Decisions

Early project decisions, made when little is known, are easily the hardest. They're also often the most critical. Maybe you've found yourself needing to tell stakeholders what your work will cost and how long it will take to deliver. Under that pressure, you might make decisions based on gut feel instead of past performance. This can lead to unrealistic targets and often results in projects running late or over budget.

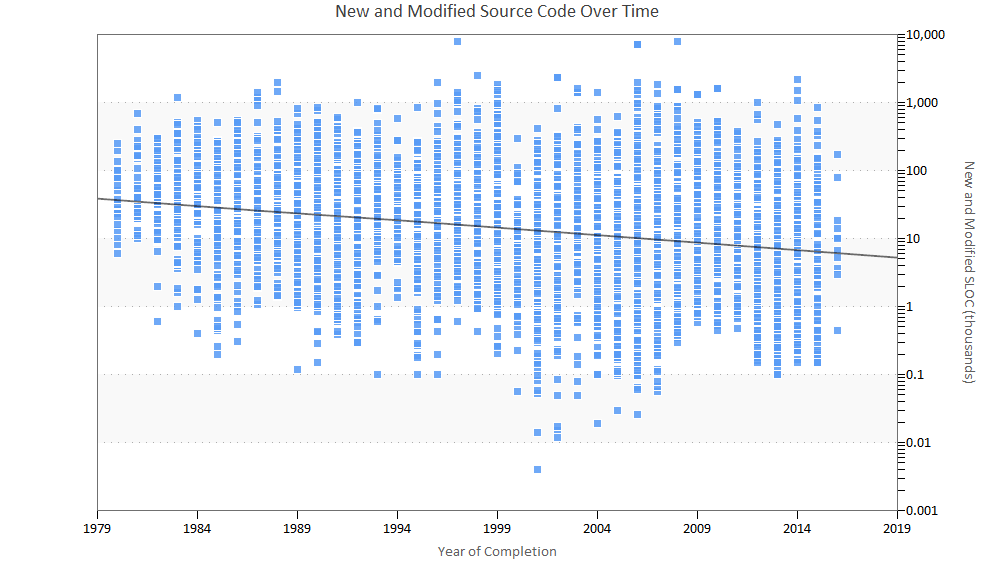

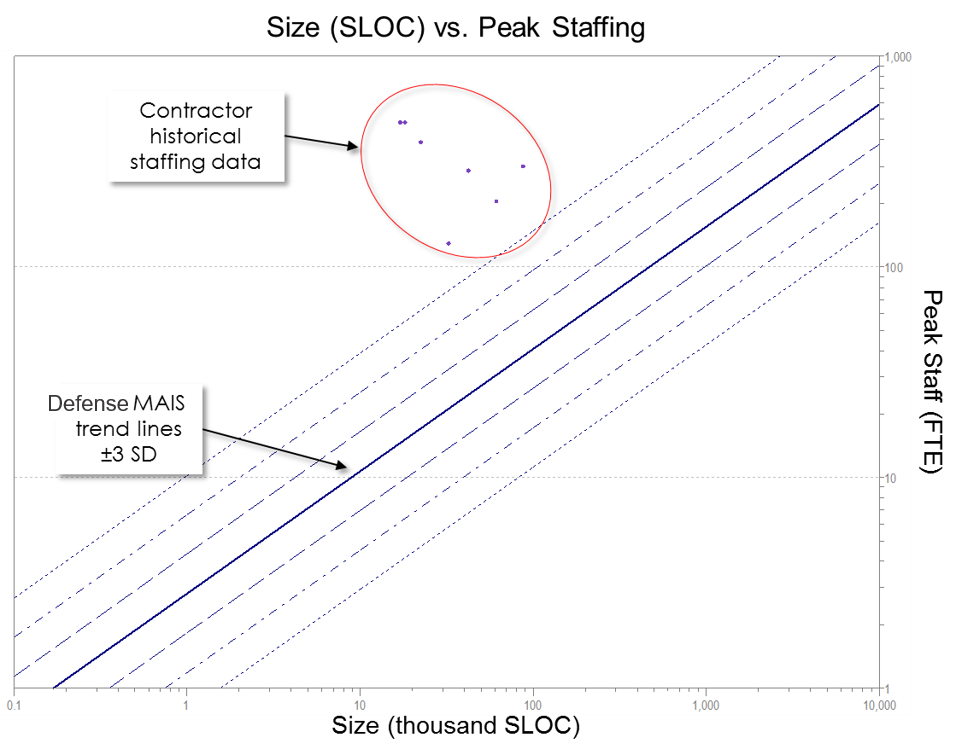

At QSM, this is when we recommend turning to historical data. Whether it's your own data or trendlines from the 13,000 validated projects in the QSM industry database, leveraging actual completed projects can make your estimates more reliable.

Believe it or not, collecting your own project history isn't as difficult as it sounds. We recommend capturing just a few basic metrics: Functionality Delivered, Total Effort, and Total Duration. Once you have this information, you can calculate a Productivity Index, a measure of productivity for the overall project or release. All of these metrics can then be used by the other project lifecycle tools in the SLIM-Suite for estimating, tracking, and benchmarking.

In the video above, you can see how easy it is to gather your own completed projects to use early in the planning process and determine if your estimates are reasonable or not. This helps you understand the big picture before you make any important project or portfolio decisions.
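If you want a feel for this kind of reasonableness check outside of any tool, here is a minimal Python sketch. It stores the three basic metrics for a few completed projects and tests whether a new estimate falls inside the range your history supports. Everything in it is an assumption for illustration: the `Project` fields, the units (function points and person-months), the sample numbers, and the simple size-per-effort `delivery_rate`. That ratio is a stand-in only. It is not the QSM Productivity Index, and it is not how the SLIM-Suite tools perform their calculations.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Project:
    """One completed (or proposed) project. Field names and units are illustrative."""
    name: str
    size: float             # Functionality Delivered, e.g. function points (assumed unit)
    effort_pm: float        # Total Effort in person-months (assumed unit)
    duration_months: float  # Total Duration in calendar months


def delivery_rate(p: Project) -> float:
    """Simple ratio of size to effort. A stand-in, NOT the QSM Productivity Index."""
    return p.size / p.effort_pm


def check_estimate(history: list[Project], estimate: Project) -> dict:
    """Compare an estimate's implied delivery rate with the historical range."""
    rates = [delivery_rate(p) for p in history]
    low, high = min(rates), max(rates)
    rate = delivery_rate(estimate)
    return {
        "rate": rate,
        "historical_low": low,
        "historical_high": high,
        "historical_median": median(rates),
        "within_history": low <= rate <= high,
    }


# Made-up sample history, for illustration only.
history = [
    Project("A", size=400, effort_pm=50, duration_months=8),
    Project("B", size=900, effort_pm=100, duration_months=12),
    Project("C", size=250, effort_pm=40, duration_months=6),
]

aggressive = Project("New (aggressive)", size=600, effort_pm=40, duration_months=5)
moderate = Project("New (moderate)", size=600, effort_pm=80, duration_months=9)

for est in (aggressive, moderate):
    result = check_estimate(history, est)
    verdict = "within" if result["within_history"] else "outside"
    print(f"{est.name}: {result['rate']:.2f} per person-month, {verdict} historical range")
```

An estimate that lands outside the historical range isn't necessarily wrong, but it is a signal to ask what would make this project different from the ones you have already delivered. The sketch looks at effort only; a fuller check would also compare duration against history.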
