QSM Announces Latest Update to the QSM Project Database

We are pleased to announce the latest update to the QSM Project Database! The 8th edition of this database includes more than 10,000 completed real-time, engineering and IT projects from 19 different industry sectors.

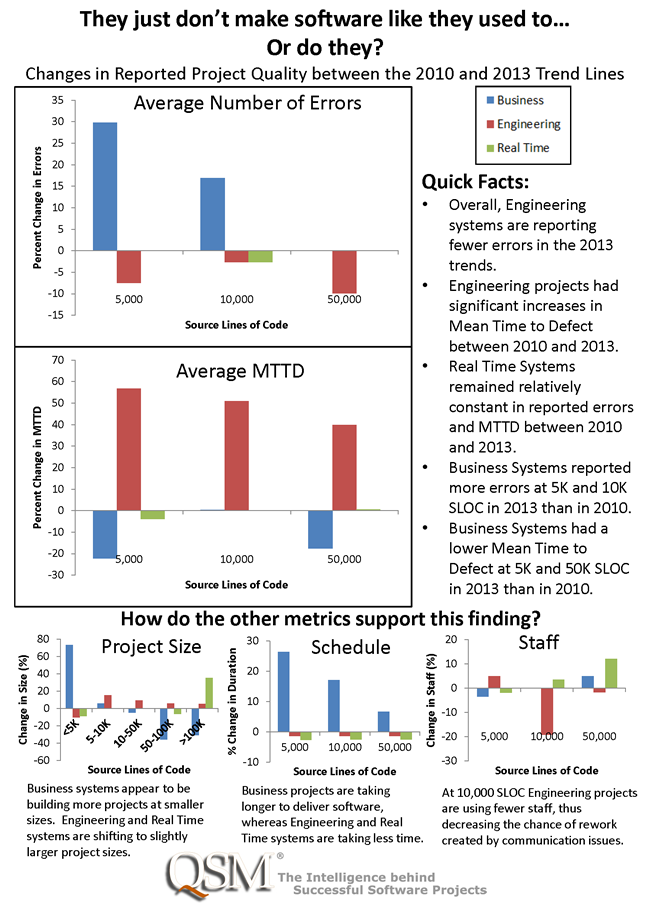

The QSM Database is the cornerstone of our business. We leverage this project intelligence to keep our products current with the latest tools and methods, to support our consulting services, to inform our customers as they move into new areas, and to develop better predictive algorithms. It ensures that the SLIM Suite of tools provides customers with the best intelligence to identify and mitigate risk and to estimate project scope efficiently, leading to projects that are delivered on time and on budget. In addition, the database supports our benchmarking services, allowing QSM clients to quickly see how they compare with the latest industry trends.

To learn more about the project data included in our latest update, visit the QSM Database page.